The Expectation of Humanity: Rethinking Credit in the Age of AI

When I first started training people to use AI, one question that came up all the time was:

"How and when do I give credit to AI?"

Whether we were talking about written content, policies, or images, there was a persistent concern about how and when to cite it. A year ago, people wanted rules and clear guidelines around this topic. They were nervous about taking credit for, or appearing to have created, something they didn’t feel was entirely their own.

A year later, it seems we've pivoted 180 degrees.

In the past few months of onboarding people to ChatGPT, I haven’t been asked that question—not even once. It went from being one of the first questions I heard to not being asked at all.

So, what does this mean?

Evolution of Technology Adoption

I think it means our perception of AI is changing: we're viewing and adopting AI as a tool rather than as an entity to whom credit is due. Its gradual adoption in the public sector has also helped normalize it, so we now expect it to be used in the ordinary course of work rather than as an exception. We’re starting to view AI as infrastructure. It’s there. It’s working. And increasingly, it’s just part of how we think, write, and build.

What we’re seeing around us, and experiencing firsthand, is the natural evolution of technology. Expectations are shifting, becoming less about giving credit and more about sharing resources. Much like tradespeople comparing which wrench, blade, or software they used to get the job done, people are sharing which AI platform they're using so others can learn from it, try it, or compare tools.

The focus is shifting from authorship to craftsmanship. I think that is an important distinction.

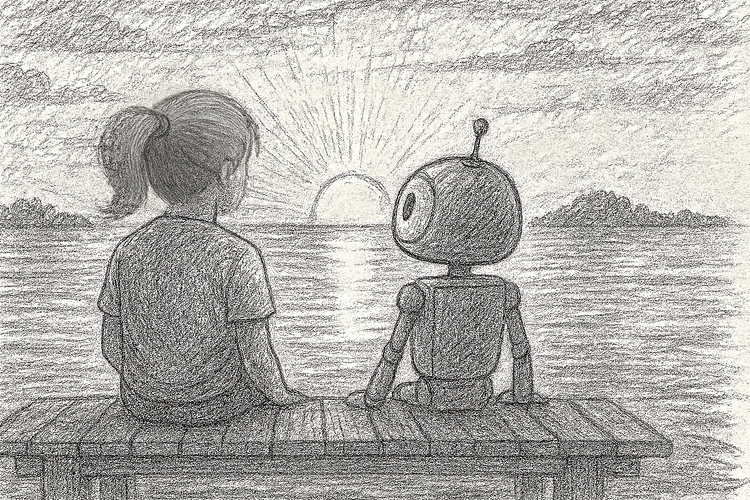

On the Expectation of Humanity

But to back up a bit and to clarify: when I say we’ve stopped asking how to credit AI, I’m referring to everyday, common uses, such as drafting emails, summarizing notes, generating placeholder images, or brainstorming with tools like ChatGPT.

I’m not talking about situations where authorship, ethics, or public trust are at stake, or where the public expects a human but gets AI instead. In those cases, transparency still matters, and I believe it always should.

I refer to this concept as the "expectation of humanity".

In many ways, it would be useful to think about and navigate this space the way we think about and navigate the expectation of privacy. The concept is new, but it's not that different from other tricky concepts we have navigated in the past. We can do it.

In the meantime, I've seen a clear pattern: adoption picks up when people aren't worried that every time they use AI, they have to explain precisely which parts are 100% their own thinking and which are AI-assisted. Can you imagine having to flag every time you used a calculator or spellcheck before hitting publish? When that fear fades, people engage more openly and naturally, and that’s how real innovation takes root.

And that’s the evolution I’m here for.
